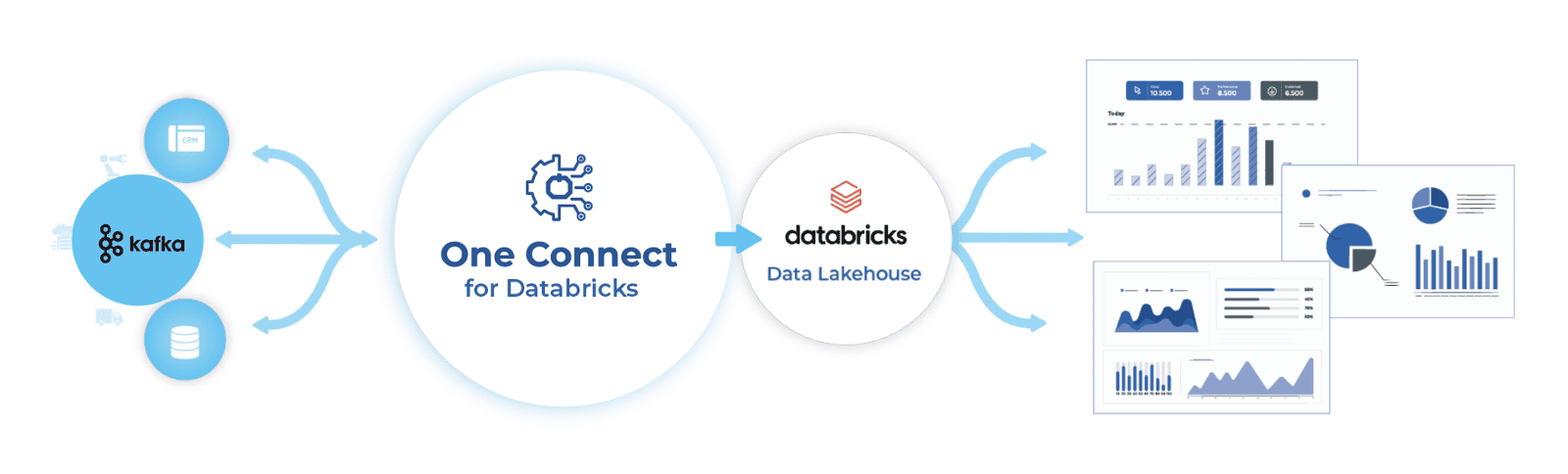

Real-Time Data Integration Made Easy

The Onibex Databricks JDBC connector transfers data from Kafka in real time and writes it to Delta Lake Live Tables based on topic subscriptions. It supports idempotent writes through upserts, as well as table auto-creation and schema auto-evolution using the Schema Registry. This enables the ingestion of both historical and real-time data, which can then be processed using any of the available Confluent sink connectors. With this connection in place, businesses can efficiently transfer data to other applications, databases, and analytics platforms, keeping the information up to date and available for a wide range of real-time use cases.


Idempotent Writes

The default insert.mode is INSERT. If configured as UPSERT, the connector will use upsert semantics rather than plain insert statements. Upsert semantics refer to atomically adding a new row or updating the existing row if there is a primary key constraint violation, providing idempotence.
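To illustrate why upsert semantics give idempotence, here is a minimal sketch that uses an in-memory SQLite table as a stand-in for the target table. It is not the connector's implementation: the orders table, the write_records helper, and the SQL statements are hypothetical, and only the insert.mode values INSERT and UPSERT come from the description above.

```python
import sqlite3


def write_records(conn, records, insert_mode="INSERT"):
    """Write (id, status) rows using plain insert or upsert semantics."""
    if insert_mode == "UPSERT":
        # Insert a new row, or update the existing row on a primary key conflict.
        sql = (
            "INSERT INTO orders (id, status) VALUES (?, ?) "
            "ON CONFLICT(id) DO UPDATE SET status = excluded.status"
        )
    else:
        # Plain insert: a repeated primary key raises an integrity error.
        sql = "INSERT INTO orders (id, status) VALUES (?, ?)"
    with conn:  # commit on success, roll back on error
        conn.executemany(sql, records)


# Hypothetical target table, standing in for the real sink table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")

batch = [(1, "NEW"), (2, "NEW")]
write_records(conn, batch, "UPSERT")
write_records(conn, batch, "UPSERT")             # redelivered batch: no duplicates
write_records(conn, [(1, "SHIPPED")], "UPSERT")  # same key: row is updated

rows = conn.execute("SELECT id, status FROM orders ORDER BY id").fetchall()
print(rows)
```

Writing the same batch twice leaves the table unchanged, which is the property that makes redelivered Kafka records safe. With INSERT mode, the second write of the same key would fail on the primary key constraint instead.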

Schema Registry

The connector supports the Avro input format for both keys and values. Schema Registry must be enabled to use a Schema Registry-based format.

Table and column auto-creation & evolution

Both auto-creation (auto.create) and auto-evolution are supported. If tables or columns are missing, they can be created automatically. Table names are derived from Kafka topic names.
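The idea behind auto-creation and auto-evolution can be sketched as a small planning step that compares the fields of an incoming record with the columns already in the table. Everything in this sketch is an assumption for illustration: the table_name_for and plan_ddl helpers, the rule that replaces non-alphanumeric characters in the topic name, and the generated DDL strings are hypothetical and may differ from what the connector actually does.

```python
import re


def table_name_for(topic):
    """Derive a table name from a topic name (hypothetical sanitizing rule)."""
    return re.sub(r"[^A-Za-z0-9_]", "_", topic)


def plan_ddl(topic, record_fields, existing_columns=None):
    """Return the DDL statements needed before a record can be written.

    record_fields maps column names to SQL types, for example taken from the
    record's schema. existing_columns is None when the table does not exist.
    """
    table = table_name_for(topic)
    if existing_columns is None:
        # Auto-creation: the table is missing, so create it with all fields.
        cols = ", ".join(f"{name} {sql_type}" for name, sql_type in record_fields.items())
        return [f"CREATE TABLE {table} ({cols})"]
    # Auto-evolution: add only the columns the table does not have yet.
    missing = {n: t for n, t in record_fields.items() if n not in existing_columns}
    if not missing:
        return []
    cols = ", ".join(f"{name} {sql_type}" for name, sql_type in missing.items())
    return [f"ALTER TABLE {table} ADD COLUMNS ({cols})"]


fields = {"id": "BIGINT", "status": "STRING", "total": "DOUBLE"}
print(plan_ddl("sales.orders", fields))                    # table missing
print(plan_ddl("sales.orders", fields, {"id", "status"}))  # one new column
```

Keeping the planning step separate from execution makes it easy to review the statements before anything is applied to the target table.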

Cloud Agnostic

Thanks to its container-based implementation, One Connect is platform-independent and can run on any operating system and cloud.

OAuth Supported

The connector supports OAuth, so it can authenticate with a token instead of a password. Databricks tools and SDKs that implement the Databricks client unified authentication standard automatically generate, refresh, and use Databricks OAuth access tokens on your behalf as needed for OAuth M2M (machine-to-machine) authentication.
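For readers curious about what an OAuth M2M token request looks like, the sketch below builds one without sending it. It assumes the client-credentials flow with the workspace token endpoint at /oidc/v1/token and the all-apis scope, as described in the Databricks OAuth M2M documentation; verify both against your workspace. The build_token_request helper, host name, and credentials are hypothetical placeholders, and the connector handles this exchange itself.

```python
import base64
import urllib.parse
import urllib.request


def build_token_request(workspace_host, client_id, client_secret):
    """Build (but do not send) a client-credentials token request."""
    credentials = f"{client_id}:{client_secret}".encode("utf-8")
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials", "scope": "all-apis"}
    ).encode("utf-8")
    return urllib.request.Request(
        # Assumed endpoint path; check the Databricks documentation.
        url=f"https://{workspace_host}/oidc/v1/token",
        data=body,
        headers={
            # Service principal credentials are sent as HTTP Basic auth.
            "Authorization": "Basic " + base64.b64encode(credentials).decode("ascii"),
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


# Placeholder values only; real credentials should come from a secret store.
request = build_token_request(
    "example.cloud.databricks.com", "my-client-id", "my-client-secret"
)
print(request.get_method(), request.full_url)
```

Passing the request to urllib.request.urlopen would perform the exchange; the JSON response is expected to carry a short-lived access token that is used in place of a password.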

Custom Catalogs and Schemas

The connector can write to the catalogs and schemas of your choice, which gives flexible control over how inserted data is organized and managed. Users can tailor these settings to their specific needs, optimizing how information is handled and stored.
